Apple has long presented itself as a staunch defender of user privacy. CEO Tim Cook has even asserted that privacy is a “fundamental human right,” and Apple’s track record has supported this notion. For example, Apple resisted the FBI’s attempts to gain access to locked iPhones for the sake of monitoring and catching suspected terrorists and mass shooters, recognizing the dangerous precedent that could be set by allowing government entities to access individuals’ private messages without a warrant.

Despite the prospect of real threats to the public, most Americans view the right to privacy as critical. For example, law enforcement generally requires a warrant based on probable cause before the contents of an electronic device can be searched. On the surface, Apple’s latest technology update seems like a practical tool to identify and flag abhorrent content like child pornography. However, it represents a fundamental shift away from the company’s previous policies and has drawn the ire of privacy advocates, who have raised serious concerns. Even Apple employees have voiced reservations about the company’s new child safety tools.

Last Thursday (8/5), Apple announced a series of updates to its iCloud photo upload process that will take effect when iOS 15 debuts. Photos bound for the cloud will now be analyzed by an algorithm to determine whether they qualify as child sexual abuse material (CSAM). The process is complex: software on the device derives characteristics from each photo and compares them against a database of known CSAM to see if there is a match. According to Apple, only images uploaded to iCloud, not those stored solely on an individual device, can be reviewed. If the matches reach a certain “threshold” (which Apple has not quantified), the media content will be reported to the National Center for Missing and Exploited Children. Concerns over false positives are already being raised. Still, the company maintains that the software is “nearly flawless,” with a less than 1 in a trillion chance of content being incorrectly flagged. However, the legal and ethical questions extend beyond false identification, rare as it might be, to privacy itself.
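To make the matching-and-threshold idea concrete, here is a minimal sketch in Python. It is not Apple's implementation: the function names (`fingerprint`, `count_matches`, `should_report`) are hypothetical, the plain SHA-256 hash is a stand-in that only matches byte-identical files (a real system would use a perceptual hash that tolerates resizing and re-encoding), and treating the "threshold" as a simple count of matching uploads is an assumption made for illustration.

```python
import hashlib


def fingerprint(photo_bytes: bytes) -> str:
    """Hypothetical stand-in for deriving photo characteristics.

    Uses an exact SHA-256 digest, so only byte-identical files match.
    """
    return hashlib.sha256(photo_bytes).hexdigest()


def count_matches(uploaded_photos, known_fingerprints) -> int:
    """Count uploaded photos whose fingerprint appears in the known database."""
    return sum(1 for photo in uploaded_photos
               if fingerprint(photo) in known_fingerprints)


def should_report(uploaded_photos, known_fingerprints, threshold: int) -> bool:
    """Flag an upload set only once the match count reaches the threshold.

    The threshold value is an assumption; the article notes that Apple
    has not quantified it.
    """
    return count_matches(uploaded_photos, known_fingerprints) >= threshold
```

Only photos passed in as uploads are ever fingerprinted here, which mirrors the claim that images kept solely on the device are not reviewed. Requiring several matches before anything is flagged is one way a system can reduce the impact of an occasional false match.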

In simplest terms, this latest iOS update will result in photo libraries being scanned by Apple, signaling a perceived change in Apple’s commitment to protecting user data. iCloud photos are encrypted, but Apple possesses a “key” to that data, meaning the data can be “unlocked” by Apple. This fact raises concerns in the context of law and government access. Those concerns are not unreasonable, as the technology could be adapted and used in other, nefarious ways.

Undoubtedly, these announcements will receive continued media scrutiny, and how consumers will react to them remains uncertain. Even if these new identification algorithms are highly accurate, a single error that wrongly accuses an individual of having illegal content on their device could damage Apple’s well-deserved reputation for protecting privacy.

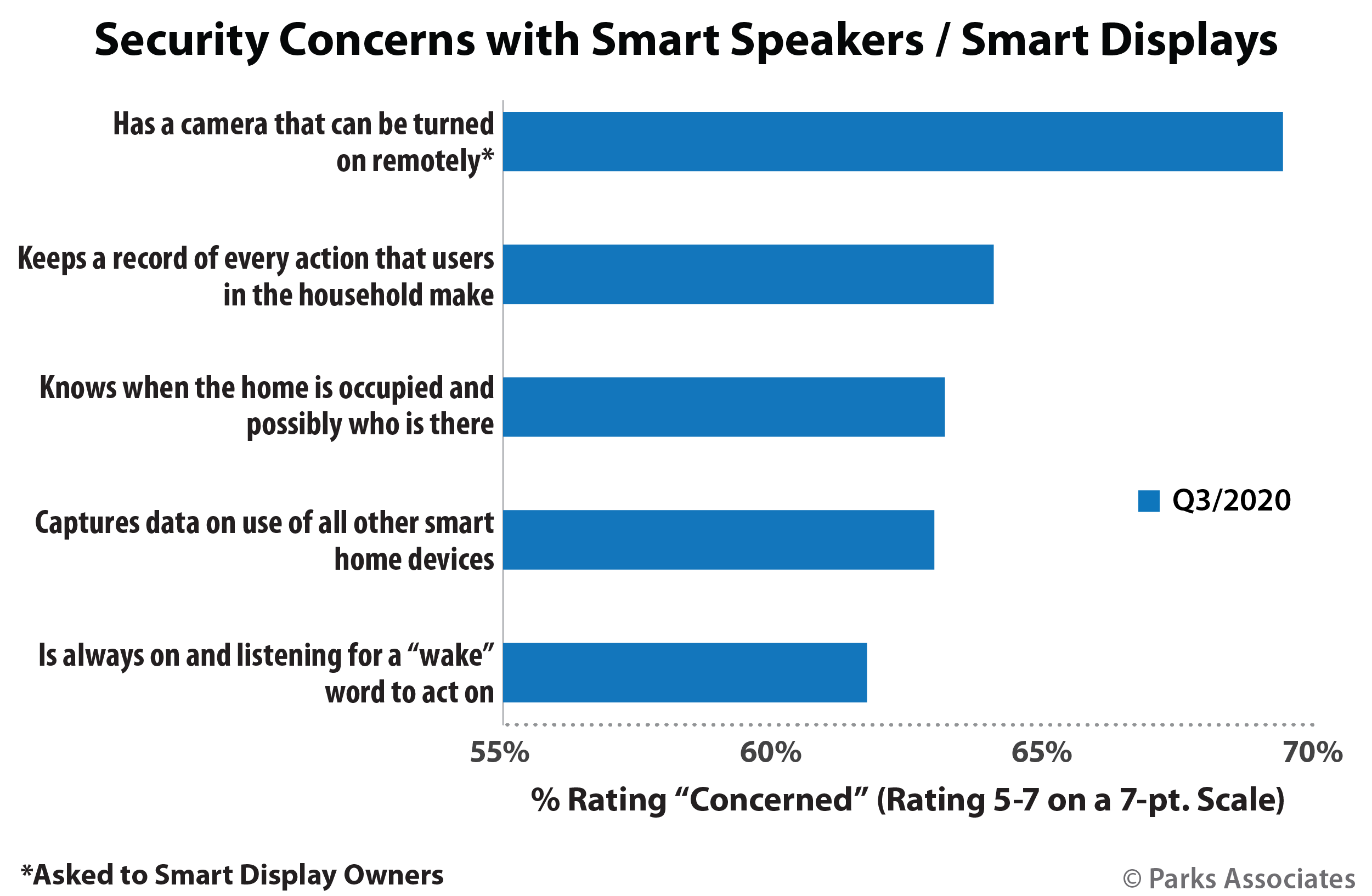

Parks Associates data consistently finds that consumers are concerned about the privacy of their data across their connected lives:

- 58% of home network router owners are highly interested in a feature that restricts outside access to personal information in the home (Quantified Consumer – Adoption and Perception of Broadband)

- 63% of smart speaker and smart display owners are concerned that the device may keep a record of every action that users in the household make; the same percentage are concerned that the device knows when the home is occupied and possibly who is there (Smart Speaker, Smart Display Market Assessment)

- Data privacy and security concerns are a top 3 purchase inhibitor for smart home devices (Quantified Consumer: Smart Home - Consumer Purchases and Preferences)

Are you interested in more exciting and compelling insights into the consumer tech market? Sign up for updates via this link and watch or listen to the SmartTechCheck podcast by Parks Associates on YouTube and Apple Podcasts.